I own a base-model MacBook Pro (16-inch, 2019). It comes with an AMD Radeon Pro 5300M GPU. Since I bought this laptop, I've struggled to use its GPU for machine learning. I've tried:

Eventually, I just gave up for a while and switched to using Google Colab. I use the paid version of Colab, which is relatively cheap and provides a pretty good service.

There is actually a third way to use my MacBook's GPU, which I stumbled upon completely by accident. It's much easier to set up and more convenient to run than the other options: you can keep using the native TensorFlow backend and don't have to switch to Linux.

You simply need to:

And that's all!

For more detailed steps, you can follow the instructions Apple provides or follow my more condensed version here:

conda create --name metal python=3.8

conda activate metal

SYSTEM_VERSION_COMPAT=0 python -m pip install tensorflow-macos

SYSTEM_VERSION_COMPAT=0 python -m pip install tensorflow-metal

python -m pip install ipykernel

python -m pip install matplotlib

python -m pip install tensorflow-datasets

First, let's import TensorFlow and ensure the GPU shows up as a visible device:

import tensorflow as tf

print(f"Tensorflow version: {tf.__version__}")

tf.config.list_physical_devices()

Tensorflow version: 2.9.2

[PhysicalDevice(name='/physical_device:CPU:0', device_type='CPU'), PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]
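In a script or notebook, it can help to fail early when no GPU is visible rather than silently training on the CPU. Below is a minimal sketch of such a check. `gpu_names` and `FakeDevice` are hypothetical names introduced here, and the stand-in device list simply mirrors the output above so the snippet runs without TensorFlow installed.

```python
from collections import namedtuple

# Hypothetical stand-in for tf.config.PhysicalDevice, which exposes the same
# `name` and `device_type` attributes. In the notebook, replace `devices`
# with the result of tf.config.list_physical_devices().
FakeDevice = namedtuple("FakeDevice", ["name", "device_type"])


def gpu_names(devices):
    """Return the names of all devices whose device_type is 'GPU'."""
    return [d.name for d in devices if d.device_type == "GPU"]


devices = [
    FakeDevice("/physical_device:CPU:0", "CPU"),
    FakeDevice("/physical_device:GPU:0", "GPU"),
]

found = gpu_names(devices)
if not found:
    raise RuntimeError("No GPU visible; check the tensorflow-metal install.")
print(f"GPUs found: {found}")
```

TensorFlow can also do the filtering itself: `tf.config.list_physical_devices('GPU')` returns only the GPU devices.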

Let's download a small dataset and get it ready for training:

import tensorflow_datasets as tfds

from pathlib import Path

BATCH_SIZE = 32

NUM_CLASSES = 3

# dataset will be placed here

data_dir = './datasets/'

Path(data_dir).mkdir(parents=True, exist_ok=True)

(ds_train, ds_test), ds_info = tfds.load(

"beans",

split=["train", "test"],

batch_size=BATCH_SIZE,

with_info=True,

as_supervised=True,

shuffle_files=True,

data_dir=data_dir

)

ds_train = ds_train.map(lambda image, label: (tf.image.resize_with_crop_or_pad(image, 224, 224),

tf.cast(label, tf.int32)))

ds_test = ds_test.map(lambda image, label: (tf.image.resize_with_crop_or_pad(image, 224, 224),

tf.cast(label, tf.int32)))

print(f"Batch shape: {list(ds_train.element_spec[0].shape)}")

print(f"Num train images: {len(ds_train) * BATCH_SIZE}")

print(f"Num test images: {len(ds_test) * BATCH_SIZE}")

Batch shape: [None, 224, 224, 3]

Num train images: 1056

Num test images: 128
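Note that the image counts printed here are the number of batches multiplied by the batch size, so they are upper bounds: a final partial batch gets counted as a full one. Here is a small sketch of that arithmetic, assuming for illustration a train split of 1,034 images (check `ds_info.splits['train'].num_examples` for the real figure). `reported_count` is a hypothetical helper, not part of TensorFlow.

```python
import math

BATCH_SIZE = 32


def reported_count(num_images, batch_size=BATCH_SIZE):
    """Mimic len(dataset) * batch_size for an already-batched dataset."""
    # The last batch may be partial, but it still counts as one batch.
    num_batches = math.ceil(num_images / batch_size)
    return num_batches * batch_size


# 1034 is an assumed image count, used only for illustration.
print(reported_count(1034))  # rounds up to a whole number of batches
print(reported_count(128))   # exact, because 128 divides evenly by 32
```

If you need exact counts, reading them from `ds_info` avoids the rounding entirely.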

Let's define the model:

model = tf.keras.applications.resnet50.ResNet50(

include_top=True,

weights=None,

input_tensor=None,

input_shape=None,

pooling=None,

classes=NUM_CLASSES

)

loss_fn = tf.keras.losses.SparseCategoricalCrossentropy()

model.compile(optimizer="adam", loss=loss_fn, metrics=["accuracy"])

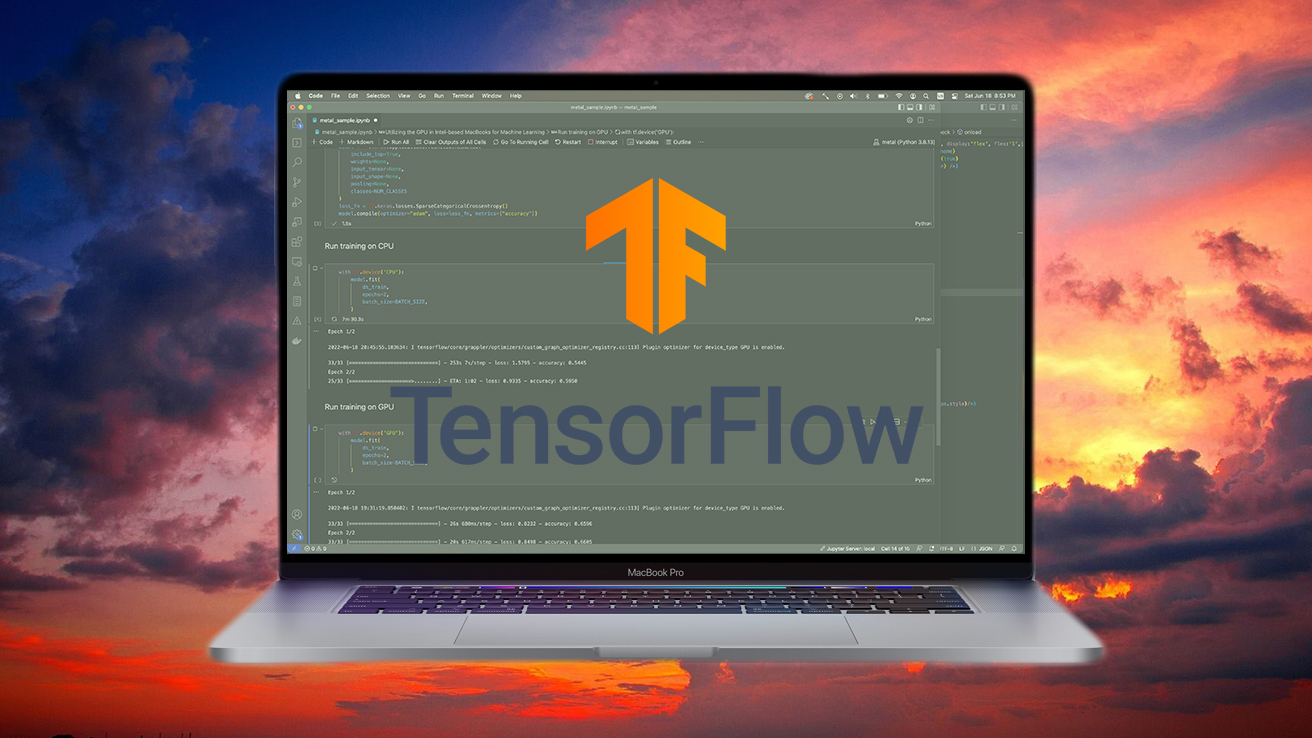

with tf.device('CPU'):

    model.fit(

        ds_train,

        epochs=2,

        batch_size=BATCH_SIZE,

    )

Epoch 1/2

2022-06-18 20:45:55.103634: I tensorflow/core/grappler/optimizers/custom_graph_optimizer_registry.cc:113] Plugin optimizer for device_type GPU is enabled.

33/33 [==============================] - 253s 7s/step - loss: 1.5795 - accuracy: 0.5445

Epoch 2/2

33/33 [==============================] - 260s 8s/step - loss: 0.9050 - accuracy: 0.6122

with tf.device('GPU'):

    model.fit(

        ds_train,

        epochs=2,

        batch_size=BATCH_SIZE,

    )

Epoch 1/2

2022-06-18 20:54:25.202441: I tensorflow/core/grappler/optimizers/custom_graph_optimizer_registry.cc:113] Plugin optimizer for device_type GPU is enabled.

33/33 [==============================] - 31s 758ms/step - loss: 0.8255 - accuracy: 0.6634

Epoch 2/2

33/33 [==============================] - 45s 1s/step - loss: 0.8030 - accuracy: 0.6721
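To put a number on the difference, the per-epoch times from the two logs above can be turned into speedup ratios. This is only a rough sketch: two epochs on one machine is a small sample, and the model was not re-initialized between the CPU and GPU runs. `speedup` is a helper name introduced here.

```python
# Per-epoch wall-clock times in seconds, copied from the training logs above.
cpu_epoch_seconds = [253, 260]
gpu_epoch_seconds = [31, 45]


def speedup(cpu_times, gpu_times):
    """Return the CPU/GPU time ratio for each epoch and for the totals."""
    per_epoch = [c / g for c, g in zip(cpu_times, gpu_times)]
    overall = sum(cpu_times) / sum(gpu_times)
    return per_epoch, overall


per_epoch, overall = speedup(cpu_epoch_seconds, gpu_epoch_seconds)
for i, ratio in enumerate(per_epoch, start=1):
    print(f"Epoch {i}: GPU is {ratio:.1f}x faster")
print(f"Overall: GPU is {overall:.1f}x faster")
```

On these numbers the GPU comes out roughly 6 to 8 times faster per epoch.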